I am Jian Lang, currently a master's candidate in Software Engineering at the University of Electronic Science and Technology of China (UESTC), under the supervision of Prof. Fan Zhou. Before that, I received my Bachelor of Engineering degree from Fuzhou University.

My research mainly focuses on robust, reliable, and stable multimodal systems that perform effectively under imperfect multimodal data, especially when facing missing modalities, distribution (domain) shifts, and data or label scarcity. I am also interested in video analysis, detection, and applications of large multimodal models.

Feel free to contact me if you have any questions about my research or potential collaboration opportunities.

🔥 News

- 2025.11: 🎉🎉 3 papers accepted to KDD 2026! See you in Jeju!

- 2025.11: 💦💦 3 papers submitted to CVPR 2026. Hoping for good results.

- 2025.10: 🎉🎉 Received the Postgraduate National Scholarship again.

📝 Selected Publications (*=Equal Contribution, †=Corresponding Author)

🛡 Robust Multimodal Learning

⚓ Robust Against Domain (Distribution) Shift

🧩 Robust Against Missing Modalities

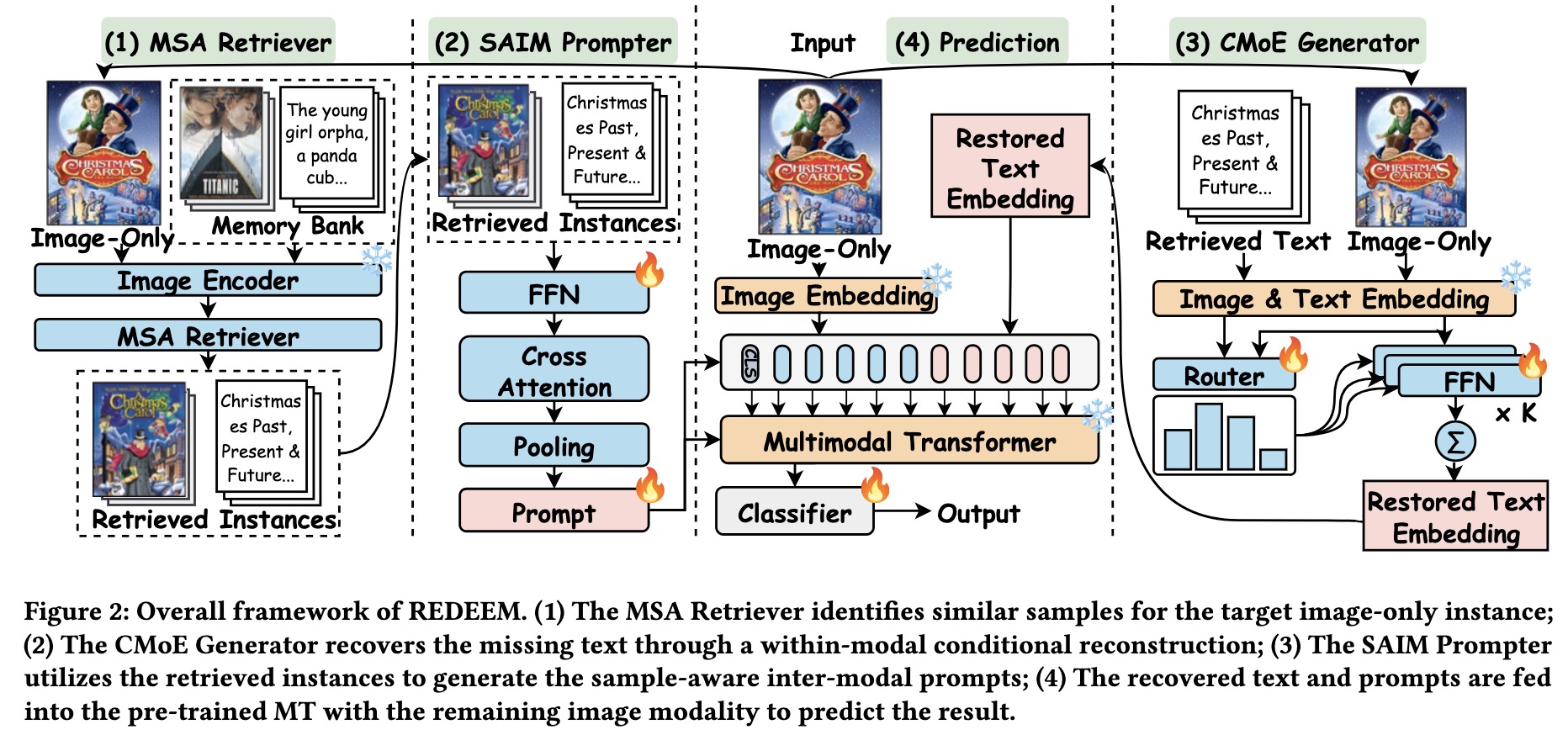

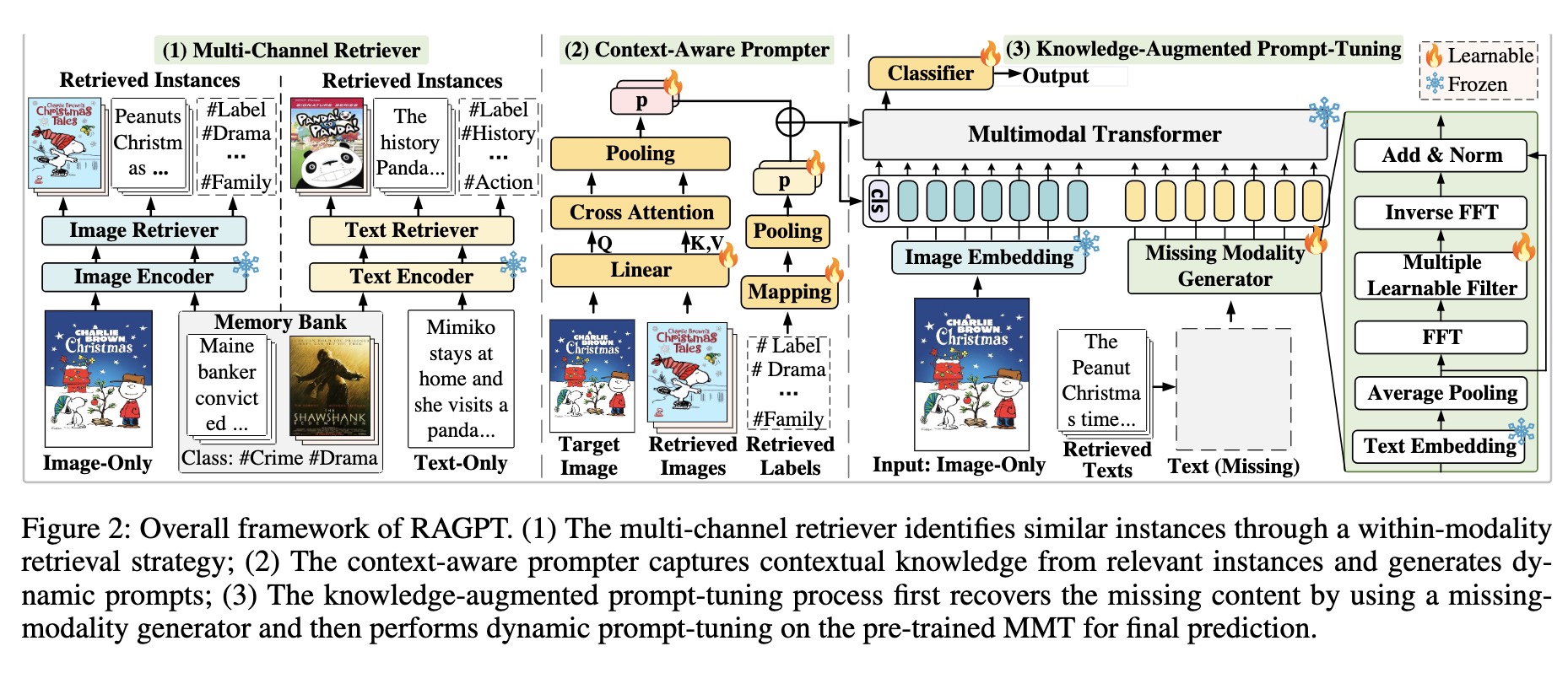

Retrieval-Augmented Dynamic Prompt Tuning for Incomplete Multimodal Learning

Jian Lang*, Zhangtao Cheng*, Ting Zhong, Fan Zhou†

AAAI 2025 | CCF A | PDF | Github

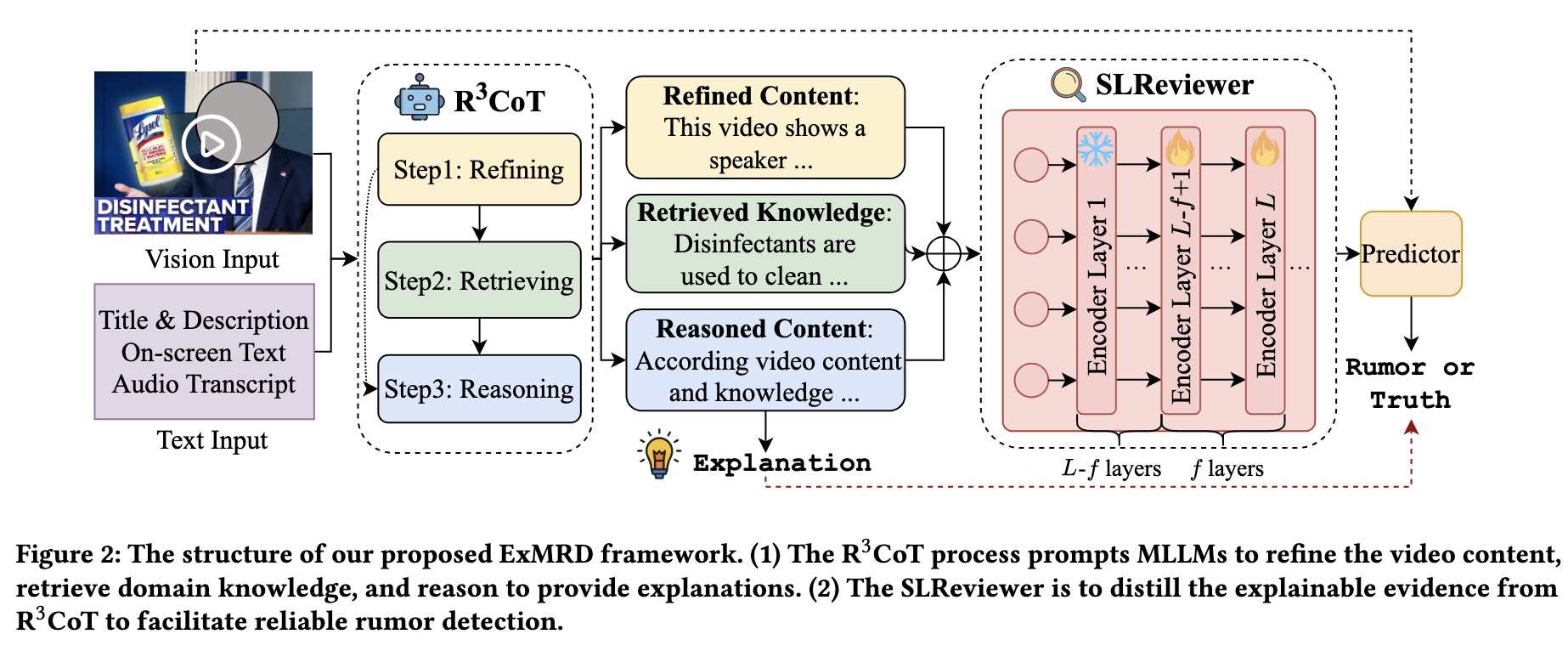

- RAGPT, a novel retrieval-augmented dynamic prompt-tuning framework for enhancing the modality-missing robustness of pre-trained multimodal transformers.

🪙 Robust Against Data / Label Scarcity

🎥 Video Analysis & Detection

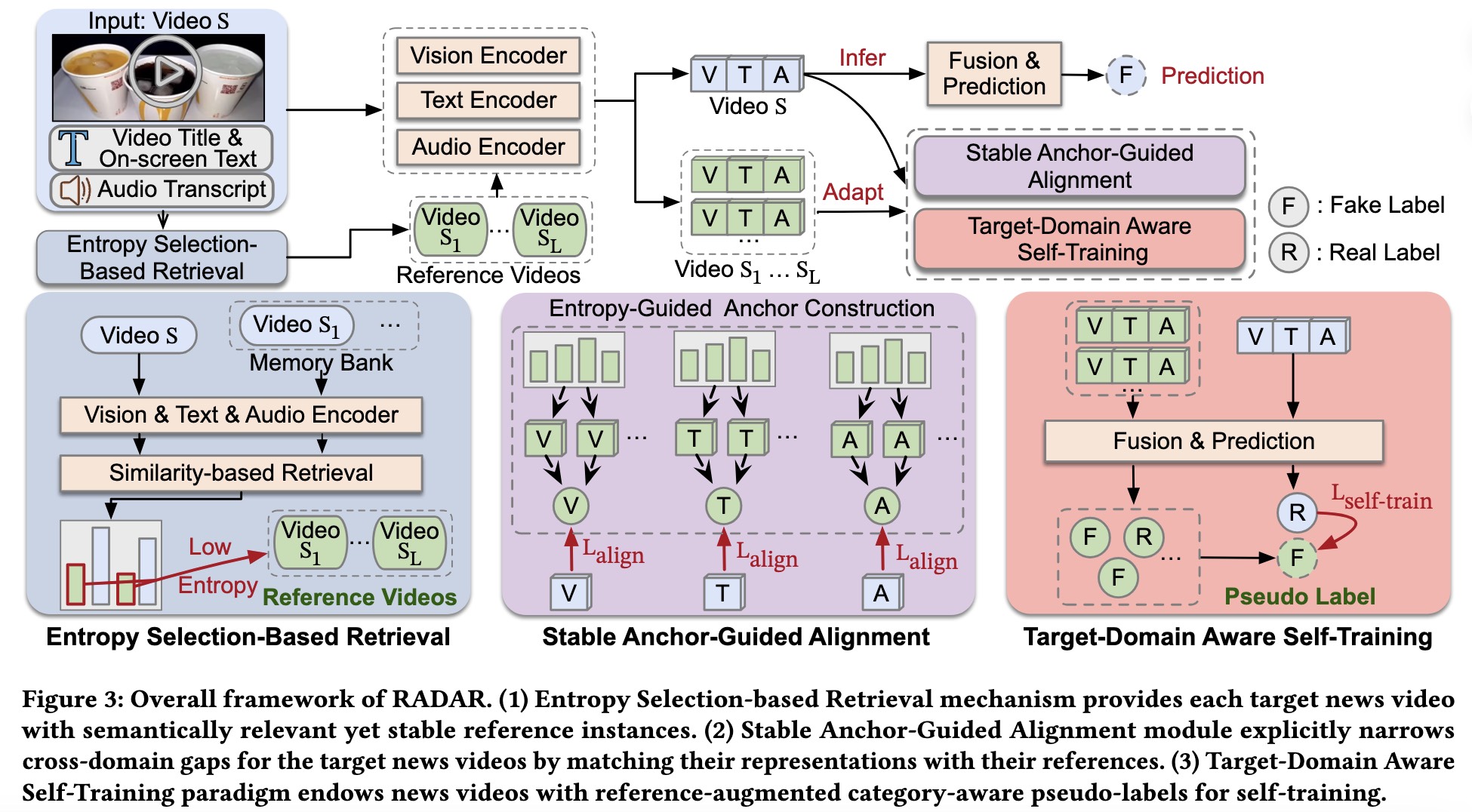

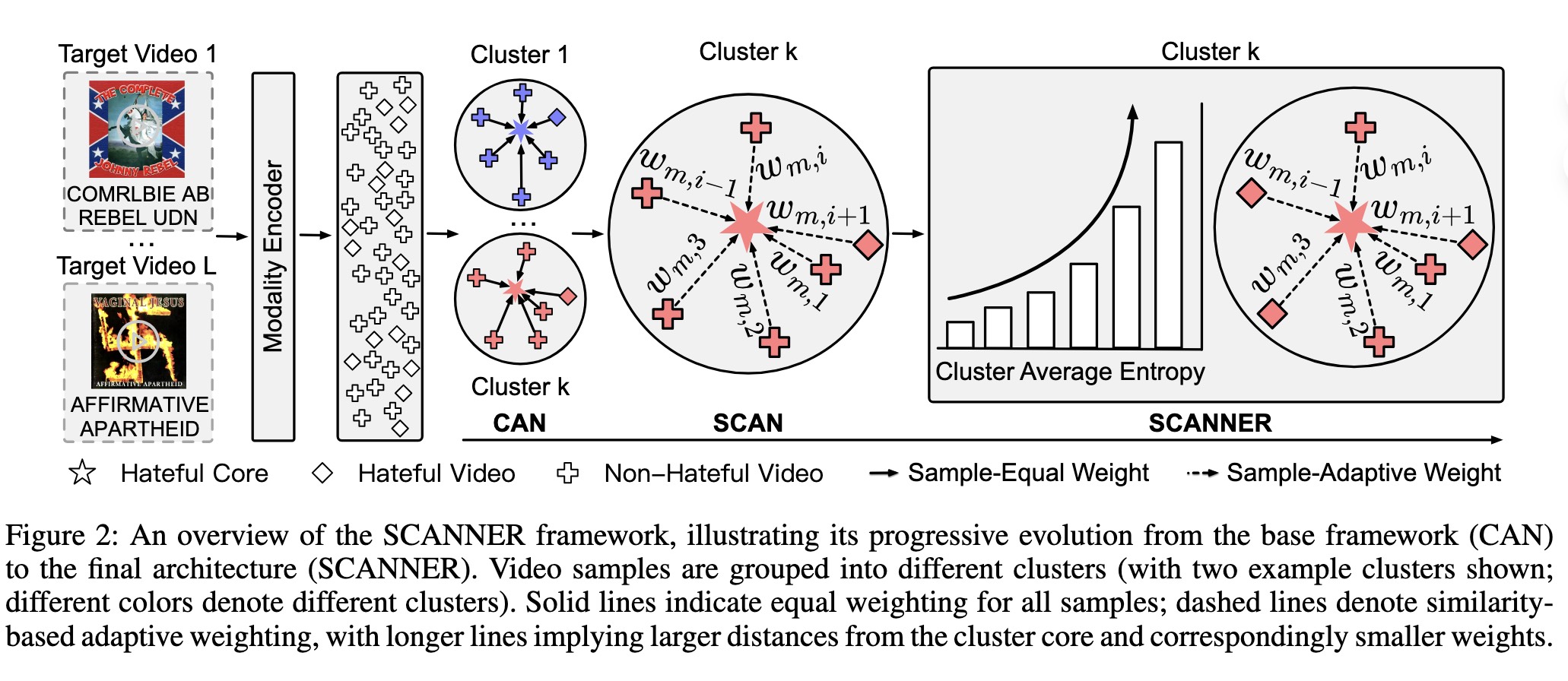

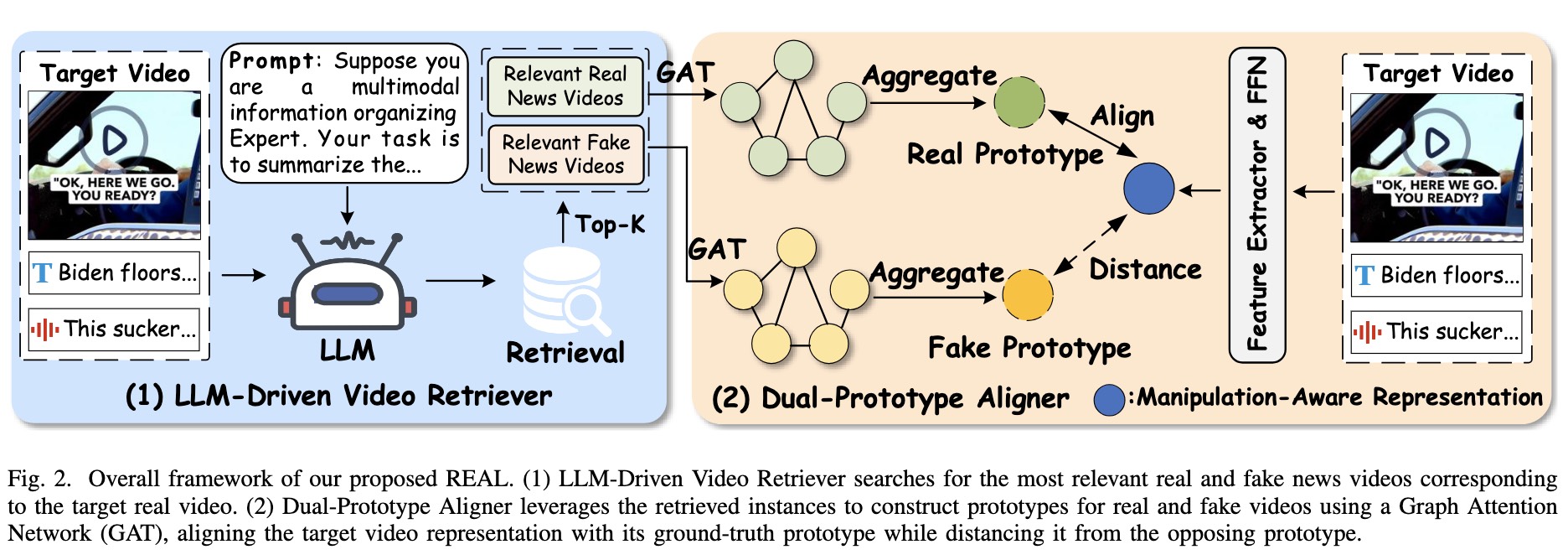

REAL: Retrieval-Augmented Prototype Alignment for Improved Fake News Video Detection

Yili Li, Jian Lang, Rongpei Hong, Qing Chen, Zhangtao Cheng, Jia Chen, Ting Zhong, Fan Zhou†

ICME 2025 | CCF B | PDF | Github

- REAL, a novel model-agnostic framework that generates manipulation-aware representations to help existing methods detect fake news videos that differ only subtly from the original authentic ones.

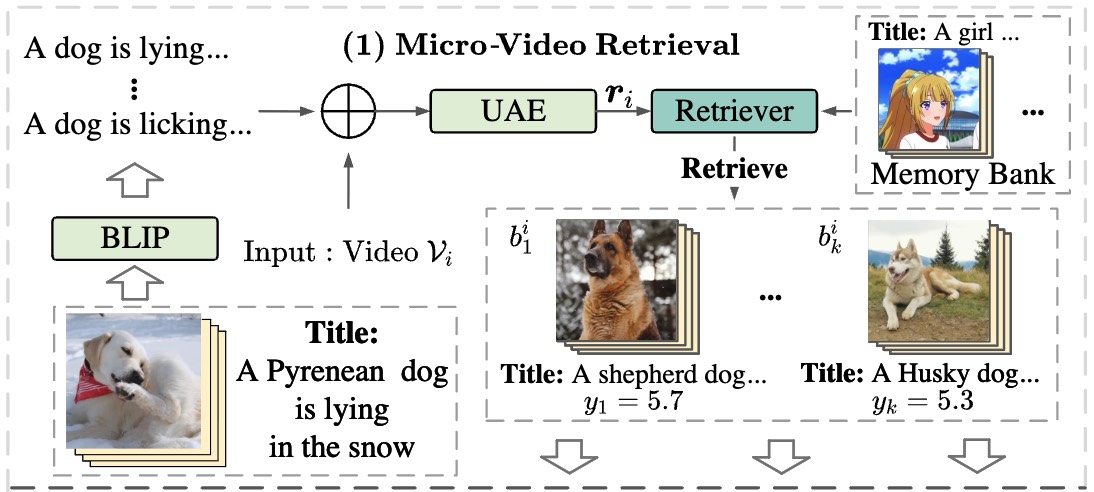

Predicting Micro-video Popularity via Multi-modal Retrieval Augmentation

Ting Zhong, Jian Lang, Yifan Zhang, Zhangtao Cheng, Kunpeng Zhang, Fan Zhou†

SIGIR 2024 | CCF A | PDF | Github

- MMRA, a multi-modal retrieval-augmented popularity prediction model that enhances prediction accuracy using relevant retrieved information.

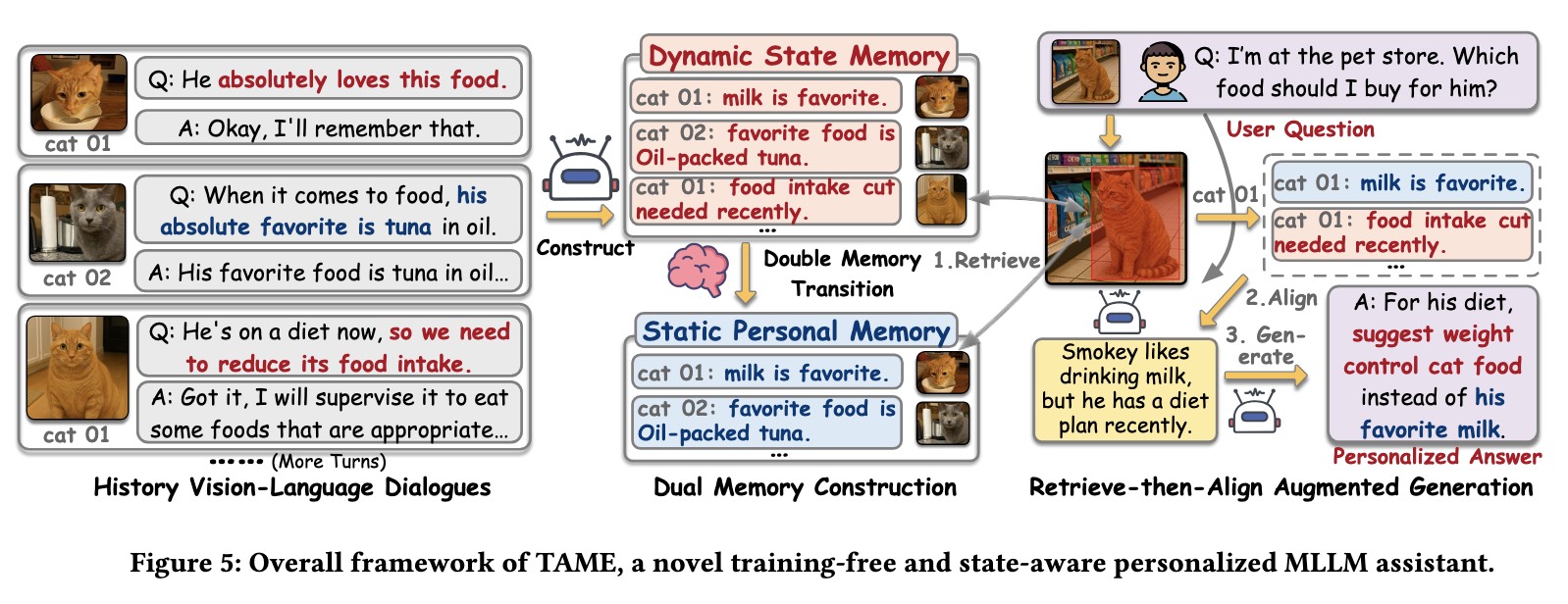

🧑‍🦱 Multimodal Large Language Model Personalization

🎖 Honors and Awards

- 2025.10 National Scholarship (Top 1%)

- 2025.10 Master’s Student Academic Scholarship (1st Division, Ranked 1st)

- 2024.10 National Scholarship (Top 1%)

- 2024.10 Master’s Student Academic Scholarship (1st Division, Ranked 1st)

- 2023.12 Artificial Intelligence Algorithm Challenge Runner-up (2nd), hosted by People’s Daily Online

📖 Education

- 2023.09 - present, Master, University of Electronic Science and Technology of China

- 2019.09 - 2023.06, Undergraduate, Fuzhou University

📝 Peer Review

- Conference Review: AAAI 2026 Reviewer

- Journal Review: IJCV, TPAMI, KBS, ESWA Reviewer

💻 Internships

- 2022.03 - 2022.06, Ruijie Networks, Software Development Intern.